The AI Black Box Problem

Can We Trust AI Models We Don’t Understand?

The term "AI black box problem" describes a fundamental challenge in artificial intelligence: the lack of transparency in how complex machine learning models, particularly deep learning systems, arrive at their conclusions.

It’s like eating a dish from a chef who refuses to share the recipe: you get the result, but no explanation. That is what happens when we rely on black-box AI systems. They deliver decisions, from medical diagnoses to legal advice, but we often have no idea how they reached them.

Technical Origins

The black box problem stems from the way modern artificial intelligence is designed. Today’s most advanced models, particularly deep learning systems built on neural networks, learn by analyzing huge amounts of data to find patterns and correlations that are often too complex for humans to follow. A deep neural network, for example, contains many hidden layers of interconnected nodes, each applying non-linear operations to the output of the layer before it. This complexity lets the models achieve high accuracy and find novel solutions to problems, but it also makes it very difficult to trace the exact steps behind a specific outcome. Even the creators of these systems may not be able to fully explain how they work internally.
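To make the "many hidden layers" point concrete, here is a minimal sketch of a forward pass through a tiny network with two hidden layers, written in plain Python. The weights are hand-picked illustrative numbers (not taken from any trained model), and the layer sizes and function names are hypothetical. Even at this toy scale, the output is a chain of weighted sums and non-linear functions, and no single weight corresponds to a human-readable rule.

```python
import math

def relu(x: float) -> float:
    """Non-linear activation: keeps positive values, zeroes out negatives."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node takes a weighted sum of every
    input, adds its bias, then applies the non-linear activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical, hand-picked weights for a 3-input network (illustration only).
W1 = [[0.5, -1.2, 0.8], [-0.3, 0.9, 0.4], [1.1, 0.2, -0.7], [0.6, -0.5, 0.3]]
B1 = [0.1, -0.2, 0.0, 0.05]
W2 = [[0.7, -0.4, 0.9, -0.6], [-0.8, 0.5, 0.3, 1.0]]
B2 = [0.0, 0.1]
W3 = [[1.3, -0.9]]
B3 = [-0.2]

def predict(features):
    h1 = dense(features, W1, B1, relu)     # first hidden layer (4 nodes)
    h2 = dense(h1, W2, B2, relu)           # second hidden layer (2 nodes)
    (score,) = dense(h2, W3, B3, sigmoid)  # single output in (0, 1)
    return score

if __name__ == "__main__":
    print(round(predict([0.2, 0.7, 0.1]), 4))
```

Real deep learning models repeat this same pattern across far more layers and millions (or billions) of weights, which is what makes tracing any single prediction back to a human-readable reason so hard.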

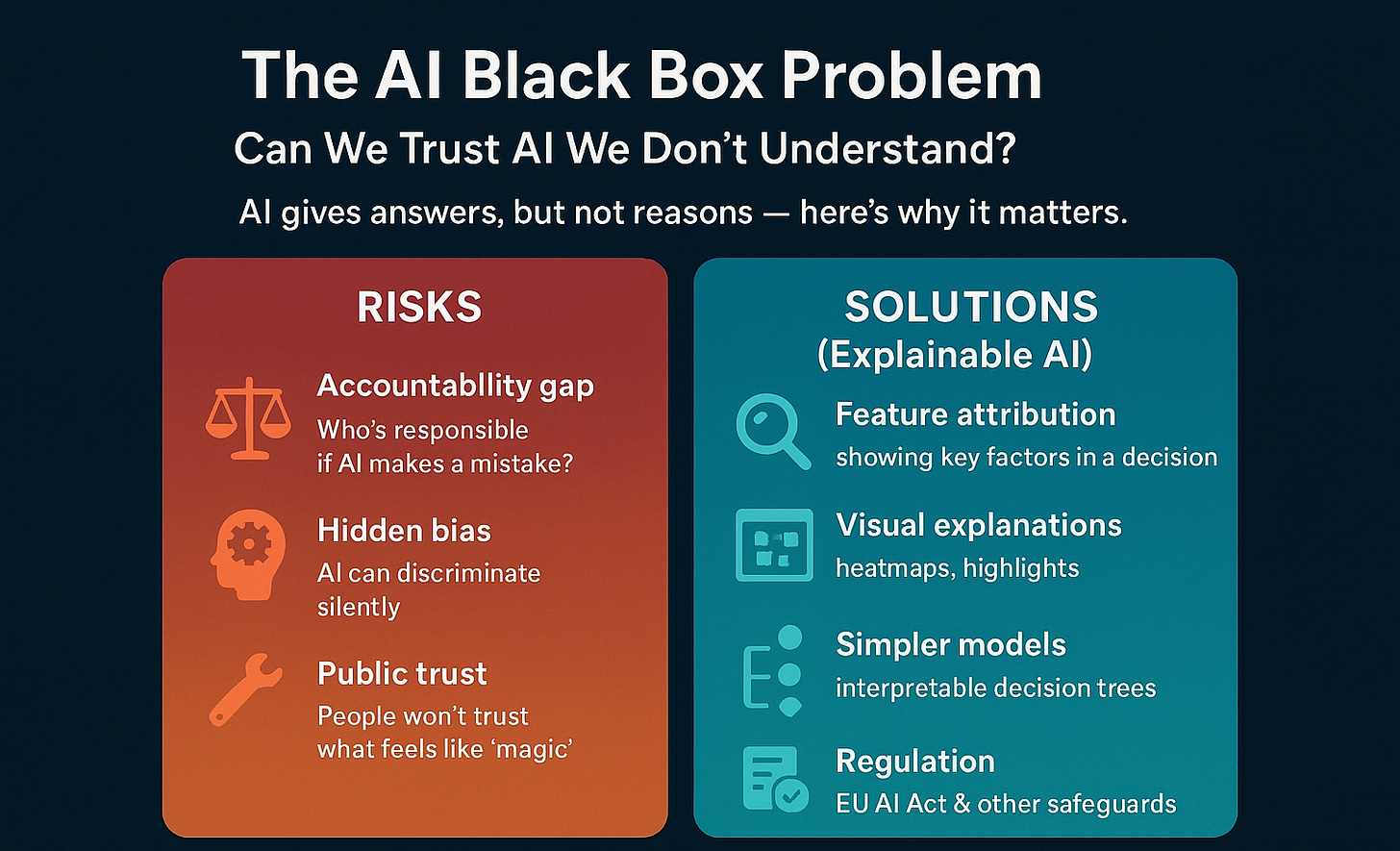

Why Does It Matter?

1. Accountability and the Culpability Gap

A major consequence of opacity in AI is that it becomes hard to assign responsibility when a system produces harmful or incorrect outcomes. Without a clear understanding of how the system reached its decision, it is difficult to tell whether the fault lies with the developers, the training data, the organization deploying it, or the user. The result is a "culpability gap": harm occurs, but no one can clearly be held accountable.

2. Ethical Concerns and Bias Amplification

The black box problem raises profound ethical concerns, particularly around bias and fairness. AI models learn from the data they are trained on, and if that data contains historical or human biases, the models can inadvertently perpetuate or even amplify those inequalities. Because the model is opaque, these discriminatory patterns are extremely difficult to detect and correct. For example, a system designed to screen job candidates could filter out qualified female applicants if it were trained on historical hiring data that skewed male. With the model's reasoning hidden, rejected applicants have no way to challenge the outcome, and the people operating the system cannot easily identify the source of the bias.
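Even when a model's internals are opaque, its outputs can still be audited. The sketch below assumes we have each applicant's screening decision and group label (the data are made up for illustration, and the function names are hypothetical). It compares selection rates across groups and computes the ratio behind the US "four-fifths rule", a rule of thumb that treats a group selection rate below 80% of the highest group's rate as a sign of possible adverse impact.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs.
    Returns {group: fraction of that group's applicants who were selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Made-up outcomes from a hypothetical black-box resume filter.
decisions = (
    [("men", True)] * 30 + [("men", False)] * 70
    + [("women", True)] * 15 + [("women", False)] * 85
)

rates = selection_rates(decisions)
ratio = adverse_impact_ratio(rates)
print(rates)
print(f"impact ratio = {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb
    print("Possible adverse impact: investigate the model and its training data.")
```

An audit like this can flag a skewed outcome, but it cannot say why the model produced it; that still requires looking inside the box or at the training data.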

3. Debugging and Maintenance Challenges

From a practical standpoint, the opacity of black box systems poses a significant challenge for developers and operators. When a system produces an unexpected or unwanted outcome, the lack of visibility into its internal workings makes it very difficult to pinpoint exactly where it went wrong and how to fix it.

Examples of the Black Box Problem

1. Healthcare: The Risks of Misdiagnosis and Algorithmic Bias

In healthcare, the black box problem is especially worrying because it directly affects patient treatment and safety. When an AI system makes a diagnosis or suggests a treatment without explaining its reasoning, clinicians find it hard to verify whether the decision is correct or whether it reflects biases in the training data. This lack of clarity makes hospitals and health organizations hesitant to adopt AI, since it is unclear who is responsible if something goes wrong.

2. Finance: Systemic Risks and the Demand for Transparency

The financial industry, which carries high levels of risk and responsibility, is particularly vulnerable to the black box problem. At the system level, complex algorithms operating without transparency can contribute to major systemic failures. At the personal level, black box models are widely used in credit scoring and fraud detection, where they can deny a loan application or block a transaction without a clear explanation. This opacity can cause serious financial losses for customers and create legal problems for financial institutions.

3. Hiring: Opaque Screening and Amplified Bias

Resume-screening tools can filter out candidates without giving clear reasons, possibly amplifying existing bias. Amazon, for example, scrapped an AI recruiting tool after discovering that it was quietly biased against women.

The Path to Enlightenment: Explainable AI (XAI)

The primary response to the black box problem is the emerging field of Explainable AI (XAI). XAI is a set of methods and processes that describe why a model made a particular prediction, helping users understand and trust the output of a complex, opaque model. Common techniques include feature-attribution methods such as LIME and SHAP, which estimate how much each input contributed to a given prediction.
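One simple, model-agnostic XAI technique is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below treats the model as an opaque function we can only call. The `black_box` scoring rule, the feature names, and the synthetic applicant data are hypothetical stand-ins invented for illustration, not a real credit model.

```python
import random

def black_box(row):
    """Hypothetical opaque model: we may call it, but pretend we cannot read it."""
    income, debt, _noise = row
    return 1 if 0.6 * income - 0.8 * debt > 0.1 else 0

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Replace only column `col`, keeping every other feature intact.
            perturbed = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, perturbed, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Synthetic applicants: (income, debt, noise), each scaled to [0, 1].
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
# Labels come from the model itself, so this measures what the model relies on.
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels)
for name, score in zip(["income", "debt", "noise"], importances):
    print(f"{name:>6}: {score:.3f}")
```

Because the procedure only queries the model, it works on any black box. It also illustrates a limitation: it reports which inputs the model relies on, not whether relying on them is fair.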

Despite its promise, XAI is not a perfect solution and has significant limitations. The explanations XAI tools produce can be inconsistent or misleading, and generating them can be computationally expensive. The most advanced methods often require considerable technical expertise to interpret, making them inaccessible to laypeople. Crucially, most XAI techniques are post hoc: they explain a model after it has been trained rather than making the model itself inherently understandable from the start.

Perhaps the most profound limitation is that XAI tools can create a "false sense of understanding." The fact that a model can explain itself does not guarantee that it is fair or that it should be trusted: a model can be fully explainable yet still be biased.

In conclusion, the black box problem is a major challenge of the AI age, but it is not unsolvable. The future of AI depends on our ability to stop trusting systems unquestioningly and to build systems that are not only accurate and robust but also open to review, fair, and aligned with human values. We need to move from vague appeals to trust toward demands for real, verifiable transparency. By combining technical, ethical, and legal approaches, we can develop more responsible AI systems. This is the key to smart and ethical progress.

The Big Question:

Airplane passengers don’t fully understand aerodynamics, yet they trust planes. Should AI be treated the same way? Or is AI different, because it directly shapes human rights, justice, and fairness?

Thanks for reading.
